Artificial intelligence isn’t just about understanding text or making predictions; it’s also about seeing. When it comes to understanding visual information, Convolutional Neural Networks (CNNs) have long been the go-to architecture.

If you’ve ever wondered how your phone unlocks using facial recognition or how AI systems identify objects in pictures, much of the answer lies in CNNs. So let’s explore what makes CNNs unique and why they’ve become such a powerful tool for image recognition.

The Basics: What Are CNNs?

Imagine you’re at a crowded concert, and you’re trying to spot your friend. You don’t scan the entire crowd at once; you start by focusing on smaller sections—looking for specific features like their hairstyle or clothing. CNNs work in a similar way.

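A traditional fully connected neural network wires every pixel to every neuron in the next layer, so it has no built-in notion of which pixels sit next to each other. A CNN instead examines the image in small, overlapping patches and looks for local features like edges, textures, or shapes, reusing the same feature detectors across the whole image. This makes the network very good at recognizing visual patterns, whether it’s a cat, a face, or a stop sign.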
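One practical consequence of reusing small detectors is a huge drop in the number of weights to learn. The back-of-the-envelope count below uses sizes chosen purely for illustration (a 224×224 RGB image, a fully connected layer with 1,000 neurons, and a convolutional layer with 64 filters of size 3×3); they are assumptions, not figures from any particular model.

```python
# Illustrative sizes (assumptions for this example, not from a specific model)
HEIGHT, WIDTH, CHANNELS = 224, 224, 3  # a 224x224 RGB image


def dense_weight_count(num_inputs: int, num_neurons: int) -> int:
    """Weights plus biases for a fully connected layer: every input feeds every neuron."""
    return num_inputs * num_neurons + num_neurons


def conv_weight_count(kernel_size: int, in_channels: int, num_filters: int) -> int:
    """Weights plus biases for a convolutional layer.

    Each filter only covers a kernel_size x kernel_size patch (across all input
    channels), and the same filter is reused at every position in the image.
    """
    return kernel_size * kernel_size * in_channels * num_filters + num_filters


dense = dense_weight_count(HEIGHT * WIDTH * CHANNELS, 1000)
conv = conv_weight_count(3, CHANNELS, 64)

print(f"Fully connected layer: {dense:,} parameters")  # 150,529,000
print(f"Convolutional layer:   {conv:,} parameters")   # 1,792
```

Notice that the convolutional count doesn’t depend on the image size at all: the same 64 small filters slide over every position, whether the image is 32×32 or 1024×1024.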

How CNNs Work: Layers Upon Layers

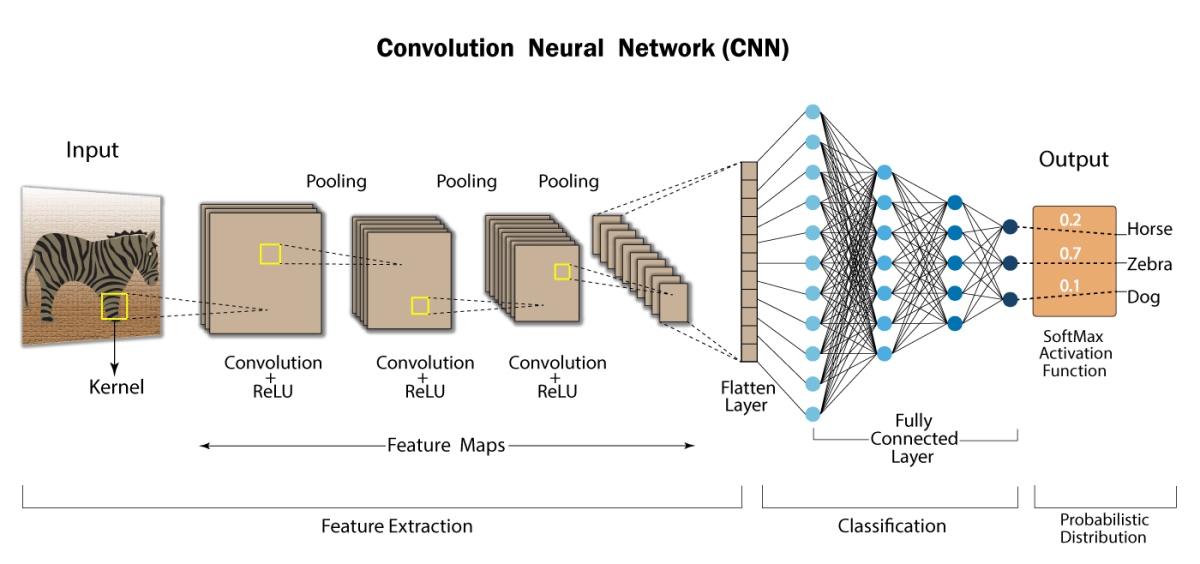

What makes CNNs stand out is the kind of layers they stack. Each layer builds on the output of the one before it: early layers detect simple features, and deeper layers combine them into increasingly complex ones. Here's how the process works:

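Convolutional Layer: This is where the magic happens. The network slides small filters (also called kernels), such as 3×3 grids of weights, across the image, examining one small patch at a time. At each position, it multiplies the filter’s weights by the pixel values underneath and adds up the results, producing a feature map that is strongest wherever the filter’s pattern appears. Each filter learns to detect a specific feature, such as an edge at a particular angle or a patch of color. Think of this as your brain recognizing simple patterns in an image, like straight lines or circles.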
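To see what a single filter actually computes, here is a minimal sketch in plain Python so every multiply-and-add is visible. The tiny image and the 3×3 vertical-edge filter are made-up illustrative values. In a real CNN the filter weights are learned during training, and libraries such as PyTorch or TensorFlow apply many filters across many channels at once.

```python
from typing import List

Matrix = List[List[float]]


def convolve2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped before sliding.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel by the patch underneath it and sum the products
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# A tiny 5x6 grayscale "image": dark (0) on the left, bright (1) on the right
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked vertical-edge filter (a trained CNN would learn its own values)
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in convolve2d(image, vertical_edge):
    print(row)  # [0.0, 3.0, 3.0, 0.0] on each of the 3 rows
```

The output is large only where the filter straddles the boundary between dark and bright pixels and zero over the flat regions, so the feature map effectively marks where the edge is.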

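Pooling Layer: Once features are detected, the network shrinks each feature map while keeping the most important information. A common approach, max pooling, keeps only the largest value in each small block (for example, 2×2), which reduces the amount of computation and makes the network less sensitive to small shifts in a feature’s position. Pooling can be thought of as summarizing: imagine looking at a picture of a car, but instead of focusing on every detail, you condense it into something like "has four wheels and a windshield."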
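Here is a minimal sketch of max pooling with a 2×2 window, one common choice of pooling (other variants, such as average pooling, exist). Each 2×2 block of the feature map is summarized by its largest value, so a 4×4 map shrinks to 2×2. The feature-map values are invented for illustration.

```python
from typing import List

Matrix = List[List[float]]


def max_pool2d(feature_map: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling: keep the largest value in each size x size block.

    In this sketch, rows or columns that don't fill a complete block are dropped.
    """
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            block = [feature_map[i + di][j + dj] for di in range(size) for dj in range(size)]
            row.append(max(block))
        pooled.append(row)
    return pooled


feature_map = [
    [1, 3, 0, 2],
    [4, 2, 1, 0],
    [0, 1, 5, 6],
    [2, 0, 7, 1],
]

print(max_pool2d(feature_map))  # [[4, 2], [2, 7]]
```

Three quarters of the numbers are discarded, but the strongest response in each region survives, which is exactly the "summary" the pooling layer passes on.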

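Fully Connected Layer: After the network has detected the important features, it flattens the feature maps into a single list of numbers and passes them through one or more fully connected layers, where every value contributes to a score for each possible class. The class with the highest score is the network’s prediction of what the image represents, whether it’s a dog, a tree, or a building.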
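The final classification step can be sketched in a few lines. This assumes two small pooled feature maps, three example classes, and hand-picked weights, all invented for illustration; a trained network learns its weights and usually has far more features and classes. The softmax at the end is one common way to turn raw scores into probabilities.

```python
import math
from typing import List


def flatten(feature_maps: List[List[List[float]]]) -> List[float]:
    """Unroll a stack of 2D feature maps into one long vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]


def dense(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """Fully connected layer: each output score is a weighted sum of *all* inputs plus a bias."""
    return [sum(w * x for w, x in zip(w_row, inputs)) + b for w_row, b in zip(weights, biases)]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Two pooled 2x2 feature maps (illustrative values) -> an 8-number vector
pooled_maps = [
    [[4, 2], [2, 7]],
    [[0, 1], [3, 0]],
]
features = flatten(pooled_maps)

classes = ["cat", "dog", "tree"]
# Hypothetical weights: one row of 8 weights per class (a real network learns these)
weights = [
    [0.5, 0.0, 0.1, 0.3, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.2, 0.0, 0.1, 0.4, 0.4, 0.4, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.1, 0.1, 0.1, 0.1],
]
biases = [0.0, 0.0, 0.0]

probabilities = softmax(dense(features, weights, biases))
prediction = classes[probabilities.index(max(probabilities))]
print(prediction, [round(p, 3) for p in probabilities])
```

With these made-up weights the "cat" score (4.3) beats "dog" (2.7) and "tree" (0.4), so the sketch predicts "cat".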

Why CNNs Are So Good at Vision

Here’s an analogy: imagine trying to identify a person in a blurry photo. You don’t need to see every detail to recognize them; you just need key features like their eyes, hair, or nose. CNNs work in a similar way. By focusing on distinctive features and ignoring unnecessary details, CNNs can recognize objects in images even when the picture isn’t perfect.

This ability to generalize is what makes CNNs so useful. You can train a CNN to recognize thousands of different objects, and, given training images with enough variety, it can learn to identify those objects across different angles, lighting conditions, and even partial obstructions.

Real-World Applications

CNNs have revolutionized fields like image recognition, medical imaging, and even autonomous driving. Here are a few examples of how CNNs are transforming industries:

Facial Recognition: CNNs power facial recognition systems on smartphones, in security cameras, and even at airports. The network is trained to recognize unique facial features, allowing it to accurately identify individuals.

Medical Imaging: CNNs are used to detect tumors or anomalies in medical scans. By analyzing patterns in X-rays or MRIs, CNNs can help doctors make more accurate diagnoses.

Self-Driving Cars: CNNs help autonomous vehicles "see" the road by identifying pedestrians, vehicles, and road signs. By analyzing visual data in real time, the car’s systems can make split-second decisions.

The Challenges: Why It’s Not All Smooth Sailing

While CNNs are incredibly powerful, they’re not without challenges. One common issue is that CNNs require a large amount of labeled data to train effectively. In other words, if you want a CNN to recognize thousands of different objects, you need a lot of images and corresponding labels (like “cat,” “dog,” “tree”).

Additionally, CNNs can struggle with bias—if the training data isn’t diverse enough, the network might not generalize well. For example, if a CNN is only trained on images of pedestrians in sunny conditions, it might have trouble recognizing pedestrians in the rain.

Final Thoughts

CNNs have opened up new possibilities in how machines can "see" their surroundings. Whether it's in healthcare, transportation, or security, CNNs are pushing the boundaries of what AI can achieve in vision-based tasks.

By focusing on the essential features of images and processing them efficiently, CNNs have become one of the most significant advances in AI since the early 2010s. As we move forward, the applications of CNNs are likely to keep expanding, unlocking new potential in how we interact with machines and how they interpret the world around us.

Next Article Preview: "Reinforcement Learning: Teaching AI Through Trial and Error"

Next, we’ll dive into the world of reinforcement learning: how AI systems learn to make decisions through trial and error, much as humans do.